ARCHIVED: Run batch jobs on Big Red II

On this page:

- Overview

- Prepare a TORQUE script for a Big Red II batch job

- Example serial job scripts

- Example MPI job scripts

- Submit, monitor, and delete jobs

- Get help

Big Red II was retired from service on December 15, 2019; for more, see ARCHIVED: About Big Red II at Indiana University (Retired).

Overview

To run a batch job on Big Red II at Indiana University, first prepare a TORQUE script that specifies the application you want to run, the execution environment in which it should run, and the resources your job will require, and then submit your job script from the command line using the TORQUE qsub command. You can monitor your job's progress using the TORQUE qstat command, the Moab showq command, or via the HPC everywhere beta.

Because Big Red II runs the Cray Linux Environment (CLE), which comprises two distinct execution environments for running batch jobs (Extreme Scalability Mode and Cluster Compatibility Mode), TORQUE scripts used to run jobs on typical high-performance computing clusters (for example, Karst) will not work on Big Red II without modifications. For your application to run properly in either of Big Red II's execution environments, your TORQUE script must invoke one of two proprietary application launch commands:

| Execution environment | Application launch command |

|---|---|

| Extreme Scalability Mode (ESM) |

The Use |

| Cluster Compatibility Mode (CCM) |

The To run a CCM job, you first must load the -l gres=ccm |

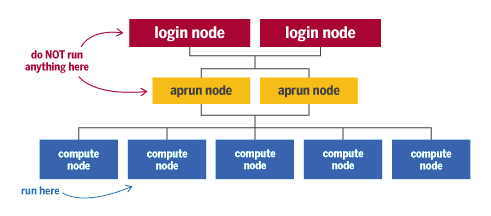

Applications launched without the aprun or ccmrun command will not be placed on Big Red II's computation nodes. Instead, they will execute on the aprun service nodes, which are intended only for passing job requests. Because the aprun nodes are shared by all currently running jobs, any memory- or computationally-intensive job launched there will be terminated to avoid disrupting service for every user on the system.

|

For more about the execution environments in CLE, see ARCHIVED: Execution environments on Big Red II at IU: Extreme Scalability Mode (ESM) and Cluster Compatibility Mode (CCM).

Prepare a TORQUE script for a Big Red II batch job

A TORQUE job script is a text file you prepare that specifies the application to run and the resources required to run it.

"Shebang" line

Your TORQUE job script should begin with a "shebang" (#!) line that provides the path to the command interpreter the operating system should use to execute your script.

A basic job script for Big Red II could contain only the "shebang" line followed by an executable line; for example:

    #!/bin/bash
    aprun -n 1 date

The above example script would run one instance of the date application on a compute node in the Extreme Scalability Mode (ESM) execution environment.

TORQUE directives

Your job script also may include TORQUE directives that specify required resources, such as the number of nodes and processors needed to run your job, and the wall-clock time needed to complete your job. TORQUE directives are indicated in your script by lines that begin with #PBS; for example:

    #PBS -l nodes=2:ppn=32:dc2
    #PBS -l walltime=24:00:00

The above example TORQUE directives indicate a job requires two nodes, 32 processors per node, the Data Capacitor II parallel file system (/N/dc2), and 24 hours of wall-clock time.

Scripts for CCM jobs must include the following TORQUE directive:

#PBS -l gres=ccm

Executable lines

Executable lines are used to invoke basic commands and launch applications; for example:

    module load ccm
    module load sas
    cd /N/u/davader/BigRed2/sas_files
    ccmrun sas SAS_input.sas

The above example executable lines load the ccm and sas modules, change the working directory to the location of the SAS_input.sas file, and launch the SAS application on a compute node in the CCM execution environment.

Note: Your application execution line must begin with the appropriate application launch command (aprun for ESM jobs; ccmrun for CCM jobs), or else your application will launch on an aprun service node instead of a compute node. Launching an application on an aprun node can cause a service disruption for all users on the system. Consequently, any job running on an aprun node will be terminated.
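Putting the three parts together (shebang line, TORQUE directives, executable lines), a minimal sketch of a complete ESM job script might look like the following. It is written as a shell snippet that saves the script to a file. The file name my_job_script.pbs, the job name, and the resource values are illustrative choices borrowed from the examples on this page, not requirements.

```shell
# Write a small ESM job script to a file (the file name is illustrative).
cat > my_job_script.pbs <<'EOF'
#!/bin/bash
#PBS -l nodes=1:ppn=32
#PBS -l walltime=00:10:00
#PBS -N my_job
aprun -n 1 date
EOF
```

The quoted heredoc delimiter ('EOF') keeps the shell from expanding anything inside the script while it is being written, so the file contains exactly the lines shown.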

Example serial job scripts

Run an ESM serial job

To run a compiled program on one or more compute nodes in the Extreme Scalability Mode (ESM) environment, the application execution line in your batch script must begin with the aprun application launching command.

The following example batch script will run a serial job that executes my_binary on all 32 cores of one compute node in the ESM environment:

    #!/bin/bash
    #PBS -l nodes=1:ppn=32
    #PBS -l walltime=00:10:00
    #PBS -N my_job
    #PBS -q cpu
    #PBS -V
    aprun -n 32 my_binary

Run a CCM serial job

To run a compiled program on one or more compute nodes in the CCM environment:

- You must add the ccm module to your user environment with this module load command:

      module load ccm

  You can add this line to your TORQUE batch job script (after your TORQUE directives and before your application execution line). Alternatively, to permanently add the ccm module to your user environment, add the line to your ~/.modules file; see ARCHIVED: Use a .modules file in your home directory to save your user environment on an IU research supercomputer.

- Your script must include a TORQUE directive that invokes the -l gres=ccm flag. Alternatively, when you submit your job, you can add the -l gres=ccm flag as a command-line option to qsub.

- The application execution line in your batch script must begin with the ccmrun application launching command.

The following example batch script will run a serial job that executes my_binary on all 32 cores of one compute node in the CCM environment:

    #!/bin/bash
    #PBS -l nodes=1:ppn=32
    #PBS -l walltime=00:10:00
    #PBS -N my_job
    #PBS -q cpu
    #PBS -l gres=ccm
    #PBS -V
    ccmrun my_binary

Note: Request ppn=32 or ppn=16 to ensure full access to all the cores on a node. Single-processor applications will not use more than one core. To pack multiple single-processor jobs onto a single node using PCP, see Use PCP to bundle multiple serial jobs to run in parallel on IU research supercomputers.

Example MPI job scripts

Run an ESM MPI job

You can use the aprun command to run MPI jobs in the ESM environment. The aprun command functions similarly to the mpirun and mpiexec commands commonly used on high-performance computing clusters, such as IU's Karst system.

The following example batch script will run a job that executes my_binary on two nodes and 64 cores in the ESM environment on Big Red II:

    #!/bin/bash
    #PBS -l nodes=2:ppn=32
    #PBS -l walltime=00:10:00
    #PBS -N my_job
    #PBS -q cpu
    #PBS -V
    aprun -n 64 my_binary
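The value passed to aprun -n in the script above matches the number of nodes multiplied by the processors per node requested in the nodes directive. A minimal sketch of that arithmetic follows. The shell variable names (nodes, ppn, total, launch_cmd) are hypothetical and are not part of TORQUE or aprun.

```shell
# Values taken from the directive "#PBS -l nodes=2:ppn=32".
nodes=2
ppn=32

# Total number of processing elements to request from aprun.
total=$((nodes * ppn))

# Build the launch line; my_binary is the placeholder program name used on this page.
launch_cmd="aprun -n ${total} my_binary"
echo "$launch_cmd"
```

Keeping the two numbers in one place makes it harder for the -n value to drift out of sync with the nodes directive when you resize a job.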

Run a CCM MPI job

To run MPI jobs in the CCM environment:

- Your code must be compiled with the CCM Open MPI library; to add the library to your environment, load the openmpi module.

  To load the openmpi module, you must have the GNU programming environment (PrgEnv-gnu) module loaded. To verify that the PrgEnv-gnu module is loaded, on the command line, run module list, and then review the list of currently loaded modules. If another programming environment module (for example, PrgEnv-cray) is loaded, use the module swap command to replace it with the PrgEnv-gnu module; for example, on the command line, enter:

      module swap PrgEnv-cray PrgEnv-gnu

- You must add the ccm module to your user environment. To permanently add the ccm module to your user environment, add the module load ccm line to your ~/.modules file; see ARCHIVED: Use a .modules file in your home directory to save your user environment on an IU research supercomputer. Alternatively, you can add the module load ccm command as a line in your TORQUE batch job script (after your TORQUE directives and before your application execution line).

- Your job script must include a TORQUE directive that invokes the -l gres=ccm flag. Alternatively, when you submit your job, you can add the -l gres=ccm flag as a command-line option to qsub.

- The application execution line in your batch script must begin with the ccmrun application launch command.

Assuming the ccm module is already loaded, the following example batch script will run a job that loads the openmpi/ccm/gnu/1.7.2 module and executes my_binary on two nodes and 64 cores in the CCM environment on Big Red II:

    #!/bin/bash
    #PBS -l nodes=2:ppn=32
    #PBS -l walltime=00:10:00
    #PBS -l gres=ccm
    #PBS -N my_job
    #PBS -q cpu
    #PBS -V
    module load openmpi/ccm/gnu/1.7.2
    ccmrun mpirun -np 64 my_binary
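Before submitting a CCM job, it can help to confirm that the script carries the pieces listed above. The following is a rough sketch of such a check, not an official tool: check_ccm_script is a hypothetical helper name, and it only looks for the gres=ccm directive and an execution line that begins with ccmrun. It cannot see a -l gres=ccm flag given on the qsub command line or a ccm module loaded from ~/.modules, so a "missing" message is a prompt to double-check rather than proof of an error.

```shell
# Hypothetical helper: report which CCM requirements a job script appears to lack.
# Returns 0 if both checks pass, 1 otherwise.
check_ccm_script() {
    ccm_script="$1"
    ccm_rc=0
    # Look for a TORQUE directive such as "#PBS -l gres=ccm".
    if ! grep -Eq '^#PBS[[:space:]]+-l[[:space:]]+.*gres=ccm' "$ccm_script"; then
        echo "missing: #PBS -l gres=ccm directive"
        ccm_rc=1
    fi
    # Look for an execution line that begins with the ccmrun launch command.
    if ! grep -Eq '^[[:space:]]*ccmrun[[:space:]]' "$ccm_script"; then
        echo "missing: execution line that begins with ccmrun"
        ccm_rc=1
    fi
    return "$ccm_rc"
}
```

For example, running check_ccm_script my_job_script.pbs prints nothing for the CCM scripts shown on this page and prints both messages for an ESM script that uses aprun.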

Submit, monitor, and delete jobs

Submit jobs

To submit a job script (for example, my_job_script.pbs), use the TORQUE qsub command:

qsub [options] my_job_script.pbs

For a full description of the qsub command and available options, see its manual page.

Monitor jobs

To monitor the status of a queued or running job, you can use the TORQUE qstat command. Useful options include:

| Option | Function |
|---|---|
| -u user_list | Displays jobs for users listed in user_list |
| -a | Displays all jobs |
| -r | Displays running jobs |
| -f | Displays full listing of jobs (returns excessive detail) |
| -n | Displays nodes allocated to jobs |

For example, to see all the jobs running in the long queue, use:

qstat -r long | less

The Moab job scheduler also provides several useful commands for monitoring jobs and batch system information:

| Moab command | Function |
|---|---|
| showq | Display the jobs in the Moab job queue. (Jobs may be in a number of states; "running" and "idle" are the most common.) |
| checkjob jobid | Check the status of a job (jobid). For verbose mode, add -v (for example, checkjob -v jobid). |
| showstart jobid | Show an estimate of when your job (jobid) might start. |
| mdiag -f | Show fairshare information. |
| checknode node_name | Check the status of a node (node_name). |
| showres | Show current reservations. |
| showbf | Show intervals and node counts presently available for backfill jobs. |

For example, to list queued (idle) jobs in the order in which they are eligible to be dispatched, on the command line, enter:

showq -i | less

For a full description of the showq command and available options, see its manual page.

Alternatively, you can monitor your job via the HPC everywhere beta.

Directly monitor processes on CCM nodes

If you have a job running in the CCM execution environment, you can SSH directly to the compute node(s) on which it is running, and from there use the ps command and/or top program to monitor the number of processes on the node, the status of each process, and the percentage of memory and CPU usage per process. If necessary, you can use the kill command to kill or suspend processes.

To SSH directly to a compute node running your CCM job:

- Determine which node is running your CCM job:

  - On the command line, enter (replacing jobid with your job ID):

        qstat -f jobid

    If you don't remember your job ID, look it up with the qstat command; on the command line, enter (replace username with your IU username):

        qstat -u username

  - Derive the compute node's name from the exec_host value listed in the output. Node names on Big Red II have five numerical characters, so prepend zeroes (as needed) to any values with fewer than five characters; for example:

    - If your job is running on node nid00786, you will see:

          exec_host = 786/0

    - If your job is running on node nid00023, you will see:

          exec_host = 23

    If your job is running across multiple hosts, you'll see multiple values for exec_host. As long as you are the owner of the CCM job, you'll be able to access any of the nodes on which it is running.

- SSH to one of the aprun service nodes; on the command line, enter:

      ssh aprun1

  When prompted for a password, enter your IU passphrase.

- From the aprun node, connect via SSH to port 203 on the desired compute node; for example, to connect to node nid00786, on the command line, enter:

      ssh -p 203 nid00786

For information about using ps, top, and kill to monitor and manage processes, see their respective manual pages.
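The zero-padding rule for node names described above can be sketched as a small shell function. The function name nid_name is hypothetical, and the sketch assumes it is given a single exec_host value such as 786/0 or 23 (it does not split a list of multiple hosts).

```shell
# Hypothetical helper: convert one exec_host value (for example, 786/0) to a node name.
nid_name() {
    # Drop everything from the first "/" onward (786/0 becomes 786).
    nid_value="${1%%/*}"
    # Strip any leading zeros so printf does not read the number as octal.
    nid_value=$(printf '%s' "$nid_value" | sed 's/^0*//')
    # Zero-pad to five digits and add the "nid" prefix.
    printf 'nid%05d\n' "${nid_value:-0}"
}

nid_name 786/0
nid_name 23
```

The two calls print nid00786 and nid00023, matching the examples above. From an aprun node, the result can then be used as the host name in the ssh -p 203 command.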

Delete jobs

To delete queued or running jobs, use the TORQUE qdel command:

| Command | Function |
|---|---|
| qdel jobid | Delete a specific job (jobid). |
| qdel all | Delete all jobs. |

Occasionally, a node becomes unresponsive and won't respond to the TORQUE server's requests to delete a job. If that occurs, add the -W (uppercase W) option:

qdel -W jobid

If that doesn't work, email the High Performance Systems group for help.

Get help

Although UITS Research Technologies cannot provide dedicated access to an entire research supercomputer during the course of normal operations, "single user time" is made available by request one day a month during each system's regularly scheduled maintenance window to accommodate IU researchers with tasks requiring dedicated access to an entire compute system. To request "single user time" or ask for more information, contact UITS Research Technologies.

Research computing support at IU is provided by the Research Technologies division of UITS. To ask a question or get help regarding Research Technologies services, including IU's research supercomputers and research storage systems, and the scientific, statistical, and mathematical applications available on those systems, contact UITS Research Technologies. For service-specific support contact information, see Research computing support at IU.

This is document bdkt in the Knowledge Base.

Last modified on 2023-04-21 16:57:19.