ARCHIVED: Running a parallel matrix multiplication program using CUDA on FutureGrid

GPUs provide the ability to use mathematical operations at a fraction of the cost and with higher performance than on the current generation of processors. CUDA is a parallel programming model and software environment that leverages the parallel computational power of GPU for non-graphics computing in a fraction of the time required on a CPU. FutureGrid provides the ability to test such a hardware and software environment as part of its Delta cluster. We illustrate some details of data-parallel computational model of CUDA and then we provide a step-by-step guide on how to make a parallel matrix multiplication program using CUDA. We also provide the complete code that has already been tested on Delta node in attachment.

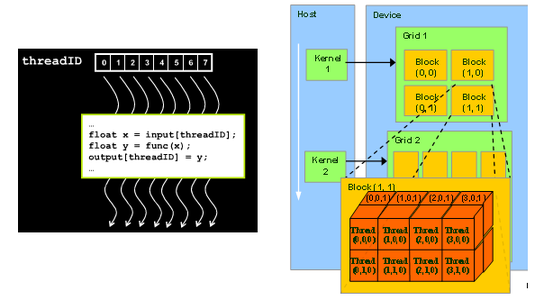

GPU Kernel and Thread model

CUDA kernel and threads

The fundamental part of the CUDA code is the kernel program. Kernel is the function that can be executed in parallel in the GPU device. A CUDA kernel is executed by an array of CUDA threads. All threads run the same code. Each thread has an ID that it uses to compute memory addresses and make control decisions. CUDA supports running thousands of threads on the GPU. CUDA organizes thousands of threads into a hierarchy of a grid of thread blocks. A grid is a set of thread blocks that can be processed on the device in parallel. A thread block is a set of concurrent threads that can cooperate among themselves through synchronization barriers and access to a shared memory space private to the block. Each thread is given a unique thread ID, "thread.Idx" within its thread block. Each thread block is given a unique block ID, "block.Idx" within its grid.

CUDA kernel code for matrix multiplication

__global__ void matrixMul( float* C, float* A, float* B, int wA, int wB)

{

// Block index

int bx = blockIdx.x;

int by = blockIdx.y;

// Thread index

int tx = threadIdx.x;

int ty = threadIdx.y;

// Index of the first sub-matrix of A processed by the block

int aBegin = wA * BLOCK_SIZE * by;

// Index of the last sub-matrix of A processed by the block

int aEnd = aBegin + wA - 1;

// Step size used to iterate through the sub-matrices of A

int aStep = BLOCK_SIZE;

// Index of the first sub-matrix of B processed by the block

int bBegin = BLOCK_SIZE * bx;

// Step size used to iterate through the sub-matrices of B

int bStep = BLOCK_SIZE * wB;

// Csub is used to store the element of the block sub-matrix that is computed by the thread

float Csub = 0;

// Loop over all the sub-matrices of A and B required to compute the block sub-matrix

for (int a = aBegin, b = bBegin; a <= aEnd; a += aStep, b += bStep) {

// Declaration of the shared memory array As used to store the sub-matrix of A

__shared__ float As[BLOCK_SIZE][BLOCK_SIZE];

// Declaration of the shared memory array Bs used to store the sub-matrix of B

__shared__ float Bs[BLOCK_SIZE][BLOCK_SIZE];

As[ty][tx] = A[a + wA * ty + tx];

Bs[ty],[tx] = B[b + wB * ty + tx];

// Synchronize to make sure the matrices are loaded

__syncthreads();

// multiply two matrices together; each thread computes one element of sub-matrix

#pragma unroll

for (int k = 0; k < BLOCK_SIZE; ++k)

Csub += As[ty][k] * Bs[k][tx];

// Synchronize to make sure that the preceding computation is done

__syncthreads();

}

// Write the block sub-matrix to device memory; each thread only writes one element!

int c = wB * BLOCK_SIZE * by + BLOCK_SIZE * bx;

C[c + wB * ty + tx] = Csub;

}

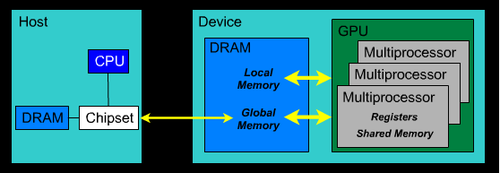

GPU memory architecture [1]

CUDA memory architecture

All multiprocessors of the GPU device access a large global device memory for both gather and scatter operations. This memory is relatively slow because it does not provide caching. Shared memory is fast compared to device memory and normally takes the same amount of time as required to access registers. Shared memory is "local" to each multiprocessor, unlike device memory, and allows more efficient local synchronization. It is divided into many parts. Each thread block within the multiprocessor accesses its own part of the shared memory and this part of shared memory is not accessible by any other thread block of this or another multiprocessor. All threads within a thread block that have the same lifetime as the block share this part of memory for both read and write operations. To declare variables in shared memory the "__shared__" qualifier is used and to declare in global memory the "__device__" qualifier is used.

CPU code invoking CUDA kernel code

void invoke_matrixMul(int size){

int devID;

cudaDeviceProp props;

checkCudaErrors(cudaGetDevice(&devID));

checkCudaErrors(cudaGetDeviceProperties(&props, devID));

int block_size = (props.major < 2) ? 16 : 32;

unsigned int uiWA, uiHA, uiWB, uiHB, uiWC, uiHC;

uiWA = uiHA= uiWB = uiHB = uiWC = uiHC;

// allocate host memory for matrices A and B

unsigned int size_A = uiWA * uiHA;

unsigned int mem_size_A = sizeof(float) * size_A;

float* h_A = (float*)malloc(mem_size_A);

unsigned int size_B = uiWB * uiHB;

unsigned int mem_size_B = sizeof(float) * size_B;

float* h_B = (float*)malloc(mem_size_B);

// initialize host memory

srand(2012);

randomInit(h_A, size_A);

randomInit(h_B, size_B);

// allocate device memory

float* d_A, *d_B, *d_C;

unsigned int size_C = uiWC * uiHC;

unsigned int mem_size_C = sizeof(float) * size_C;

// allocate host memory for the result

float* h_C = (float*) malloc(mem_size_C);

float* h_CUBLAS = (float*) malloc(mem_size_C);

checkCudaErrors(cudaMalloc((void**) &d_A, mem_size_A));

checkCudaErrors(cudaMalloc((void**) &d_B, mem_size_B));

// copy host memory to device

checkCudaErrors(cudaMemcpy(d_A, h_A, mem_size_A, cudaMemcpyHostToDevice) );

checkCudaErrors(cudaMemcpy(d_B, h_B, mem_size_B, cudaMemcpyHostToDevice) );

checkCudaErrors(cudaMalloc((void**) &d_C, mem_size_C));

// setup execution parameters

dim3 threads(block_size, block_size);

dim3 grid(uiWC / threads.x, uiHC / threads.y);

//Performs warmup operation using matrixMul CUDA kernel

if (block_size 16) {

matrixMul<16><<< grid, threads >>>(d_C, d_A, d_B, uiWA, uiWB);

} else {

matrixMul<32><<< grid, threads >>>(d_C, d_A, d_B, uiWA, uiWB);

}

cudaDeviceSynchronize();

// clean up memory

free(h_A);

free(h_B);

free(h_C);

}

References:

[1] High Performance Computing with CUDA, 2009 User Group Conference

[2] http://www.nvidia.com/content/global/global.php

Source code: git clone git@github.com:futuregrid/GPU.git

Usage:

module load cuda

module load intel

nvcc -c matrixMul.cu -L/opt/cuda/lib64 -lcudart

This is document bcgu in the Knowledge Base.

Last modified on 2018-01-18 17:09:09.