IU Bloomington Data Center Enterprise Pod specifications

Supporting: Academic and administrative business critical systems, and campus computing system-wide

Executive summary

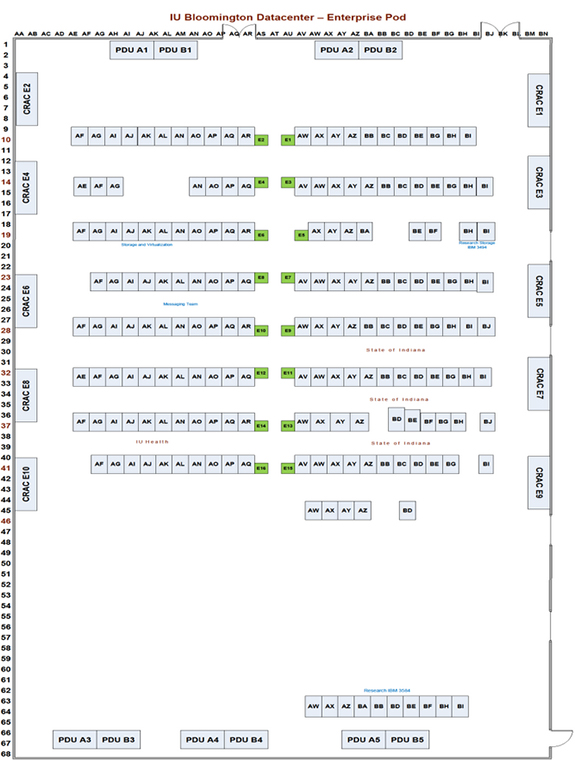

The Enterprise Pod (EP) Data Center at Indiana University Bloomington serves business-critical systems and hosts systems that may fall under federal or other data protection requirements, including but not limited to FERPA, PCI, and HIPAA. The EP is backed by UPS and backup generators. It provides the campus computing system with 105 computer cabinets. UITS also has co-location contracts with IU Health and the State of Indiana (IOT), with 70 computer cabinets supporting power as high as 15 kW per cabinet.

The EP is intended to, at minimum, support NIST Moderate and similar physical compliance requirements. UITS will work with each client to mutually support compliance as required.

EP key specifications

- Provide capacity for 175 cabinets (540 kW)

- Cabinet maximum power capacity ranges from 5 to 15 kW, depending on the location

- Cabinet voltage capacity: 208 V

Note: 110 V will have extremely limited support

- UPS/flywheel capacity: 20-30 seconds of run time at 100% load

- Generator capacity is available to support the entire EP

- Cabinet weight ratings:

  - Maximum compute aisle floor loading is 312 lbs./sq. ft.

  - Example: 312 lbs./sq. ft. * 8 sq. ft. cabinet footprint = 2,496 lbs.

- Cooling is provided by chilled-water computer room air handler (CRAH) units

- Room operating temperature is 72° F
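The floor-loading example and the capacity figures above can be checked with a few lines of arithmetic. The following is a minimal sketch, not an official UITS tool: the function names are hypothetical, and it assumes that the 540 kW figure is the total power available to all 175 cabinets.

```python
# Figures taken from the key specifications above.
FLOOR_LOADING_PSF = 312   # maximum compute aisle floor loading, lbs./sq. ft.
TOTAL_CABINETS = 175      # cabinet capacity of the EP
TOTAL_POWER_KW = 540      # assumed here to be the total power for all cabinets


def max_cabinet_weight_lbs(footprint_sqft, loading_psf=FLOOR_LOADING_PSF):
    """Heaviest cabinet the floor rating allows for a given footprint."""
    return loading_psf * footprint_sqft


def average_power_per_cabinet_kw(total_kw=TOTAL_POWER_KW, cabinets=TOTAL_CABINETS):
    """Average power per cabinet if the total were shared evenly."""
    return total_kw / cabinets


if __name__ == "__main__":
    # Reproduces the example in the list: 312 * 8 = 2496 lbs.
    print(max_cabinet_weight_lbs(8))
    # Average power per cabinet, rounded to two decimals.
    print(round(average_power_per_cabinet_kw(), 2))
```

Under that assumption the even-share average is about 3.09 kW per cabinet, below the 5-15 kW per-cabinet maximums, which suggests that not every cabinet could draw its maximum at the same time. Actual per-cabinet limits depend on location.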

Assumptions

- All clients are responsible for data protection, integrity, and destruction.

- All business critical systems are required to support redundant power supplies.

- Where possible, clients will use the Data Center rack management standards supporting common power grounding/bonding, cabinet interconnection, and internal rack wiring. Cables should be neatly dressed. All cables should be properly labeled so they can be easily identified. Refer to "TIA/EIA-942 Infrastructure Standard for Data Centers", section 5.11.

- Cabinet weight ratings will adhere to the raised floor, receiving, and staging room limitations.

- Generator is available for power backup.

- All third-party vendors will adhere to the design criteria of the EP.

- Any customization to the EP space will be funded by project owners and managed by UITS.

Lead times

The average lead time for a cabinet solution is four to six weeks, depending on quantity. UITS will work with each client to minimize lead time. If desired, clients may pre-purchase standard equipment, which would be installed on the compute floor in preparation for any pending server installations.

EP RFP specifications

Indiana University EPOD general requirements:

- Cabinet general requirements:

  - All cabinets to be APC model AR3157, 29" W x 42" D and 48 RU tall

  - All cabinets to be no taller than 48 RU

  - Maximum weight rating of each cabinet not to exceed 280 lbs./sq. ft.

  - All cabinets to be weighed and details provided prior to shipment

  - All cabinets will have actual weight clearly marked

  - Each project to provide inside or "white glove" delivery of all equipment

  - Project is responsible for removing all packing material and trash; recommend vendor be held responsible for this effort

  - Loaded equipment cabinets will be delivered directly to the computer floor

- Cabinet power:

  - All devices to accommodate 208 V

  - Each cabinet maximum power load can be obtained by contacting Data Center Operations (DCOPS)

  - All standard cabinet distribution units (CDUs) will be single-phase power distribution; three-phase available

  - CDU input connectors will be L6-30 (30 amp)

  - All computer power cables are to be C13/C14 or C19/C20

  - All CDUs will, at minimum, monitor total power; temperature and humidity monitoring are optional

  - Outlet level monitoring is optional

  - Switched outlets are optional

- Cooling: The Data Center is cooled with the use of chilled water delivered to CRAH units; see the floor plan below for configuration details.

- Network:

  - Copper and fiber integration to follow university standards

  - Network egress to campus will use standard infrastructure through the "network" cabinets in each row

  - Network cabling will be mounted in the overhead cable management system

  - Project to provide patch cables
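The cabinet and power requirements above lend themselves to a quick planning calculation. The sketch below is illustrative only. The function names are hypothetical. The 80% continuous-load derating is a common electrical practice assumed here, not something stated in this document. The result is in kVA, which equals kW only at unity power factor. Confirm actual per-cabinet limits with DCOPS.

```python
# Figures taken from the RFP requirements above.
CABINET_WIDTH_IN = 29      # cabinet width, inches
CABINET_DEPTH_IN = 42      # cabinet depth, inches
MAX_CABINET_PSF = 280      # maximum cabinet weight rating, lbs./sq. ft.
CDU_VOLTS = 208            # single-phase supply voltage
CDU_AMPS = 30              # L6-30 connector rating, amps

# Assumption (not from this document): continuous loads are limited to
# 80% of the circuit rating.
CONTINUOUS_DERATE = 0.8


def footprint_sqft(width_in=CABINET_WIDTH_IN, depth_in=CABINET_DEPTH_IN):
    """Cabinet footprint in square feet (144 sq. in. per sq. ft.)."""
    return width_in * depth_in / 144


def max_loaded_weight_lbs(psf=MAX_CABINET_PSF):
    """Heaviest loaded cabinet allowed by the per-square-foot rating."""
    return psf * footprint_sqft()


def cdu_usable_kva(volts=CDU_VOLTS, amps=CDU_AMPS, derate=CONTINUOUS_DERATE):
    """Usable apparent power of one single-phase CDU circuit, in kVA."""
    return volts * amps * derate / 1000


if __name__ == "__main__":
    print(f"Footprint: {footprint_sqft():.2f} sq. ft.")
    print(f"Max loaded weight: {max_loaded_weight_lbs():.0f} lbs.")
    print(f"Usable power per CDU circuit: {cdu_usable_kva():.2f} kVA")
```

With these inputs, the footprint comes to roughly 8.46 sq. ft., the weight limit to roughly 2,368 lbs., and one derated L6-30 circuit to roughly 5 kVA.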

This is document bhqf in the Knowledge Base.

Last modified on 2023-07-21 15:29:15.